My look back at 2025 concludes with a review of my most popular Substacks of the year. Much like my top ten blog posts, lawful access, privacy and digital policy were the most popular issues, though Substack also included a focus on Quebec’s Internet streaming legislation and multiple posts on the digital services tax.

Latest Posts

The Year in Review: Top Ten Law Bytes Podcast Episodes

The final Law Bytes podcast of 2025, released last week, took a look back at the year in digital policy. With the podcast on a holiday break, this post looks back at the ten most popular episodes of the year. Topping the charts this year was a discussion with Sukesh Kamra on law firm adoption of artificial intelligence and innovative technologies. The episode is part of the Law Bytes Professionalism Pack that enables Ontario lawyers to obtain accredited CLE professionalism hours. Other top episodes covered digital policy under the Carney government and privacy law developments, alongside a trio of episodes on Bill C-2, the government’s lawful access bill.

The Year in Review: Top Ten Posts

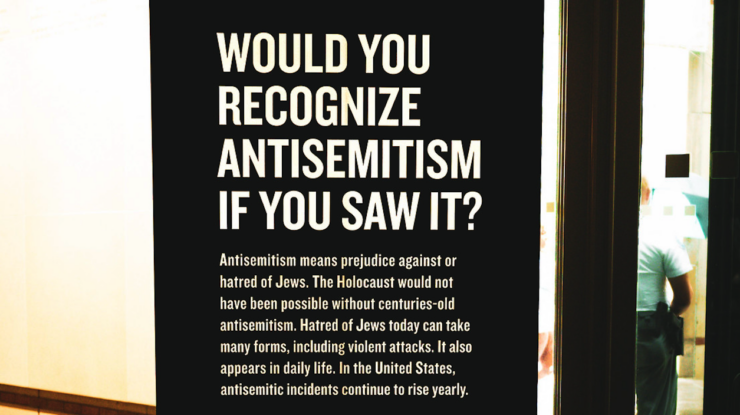

This week’s Law Bytes podcast featured a look at the year in review in digital law and policy. Before wrapping up for the year, the next three posts over the holidays will highlight my most popular posts, podcast episodes, and Substacks of the past year. Today’s post starts with the top posts, in which two issues dominated: lawful access and antisemitism. While most of the top ten involves those two issues, the top post of the year featured an analysis of the government’s approach to the digital services tax, which ultimately resulted in an embarrassing climbdown by the government.

The Law Bytes Podcast, Episode 254: Looking Back at the Year in Canadian Digital Law and Policy

Canadian digital law and policy in 2025 was marked by the unpredictable, with changes in leadership in Canada and the U.S. driving a shift in policy approach. Over the past year, that included a reversal on the digital services tax, the re-introduction of lawful access legislation, and the end of several government digital policy bills including online harms, privacy, and AI regulation. For this final Law Bytes podcast of 2025, I go solo without a guest to talk about the most significant developments in Canadian digital policy from the past year.

Confronting Antisemitism in Canada: If Leaders Won’t Call It Out Without Qualifiers, They Can’t Address It

The devastating consequences of the rise of antisemitism are in the spotlight this week in the wake of the horrific Chanukah Massacre in Australia over the weekend. In addition to my post on the issue, I appeared yesterday on CBC Radio’s syndication, conducting 14 interviews in rapid succession with stations from coast to coast [clip here]. Most of the interviews followed a similar script: focusing on the rise of antisemitism in Canada as documented by Statistics Canada, lamenting the troubling range of violent antisemitic incidents (including the Ottawa grocery store stabbing and the Toronto intimidation marches in Jewish neighbourhoods), and describing life as a Jew in Canada in 2025, which invariably necessitates a police presence at schools and synagogues alongside measures to hide Jewish symbols and identity in public.